Two Risks for Nvidia

"People who operate datacenters think about the cost of operations. Our time to deployment, our performance, our utilization, our flexibility across all these different applications, [allows our total cost of operations to be] so good that even when the competitors' chips are free, it's not cheap enough."

- Jensen Huang

When Apple had $25 billion of annual revenue in 2007, it was growing around 50% thanks to the launch of the iPhone. Google was at a similar scale in 2009 and grew 24% the following year. Microsoft hit that revenue level in 2001 when it was growing about 12%.

Then there's Nvidia. From a base of $27 billion of revenue in calendar 2022 (FY 2023), the company grew 126%. It's expected to grow another 98% this year. There is no precedent for a company this size growing this quickly.

How is it possible?

• Nvidia's products are expensive. A single H100 graphics processing unit sells for around $30,000. HGX servers that combine multiple GPUs with networking, memory, cooling, and other components can sell for $250,000. That's a lot of iPhones.

• Nvidia's customers have huge budgets. Meta plans to spend $35–$40 billion on capital expenditures this year. Microsoft spent $14 billion on capex just last quarter and guided to a material sequential increase.

• We're in an artificial intelligence "land run." Big tech firms are desperate to establish themselves as leaders. AI startups want to find a sustainable niche. Almost every other company is wondering how AI will affect them.

• Nvidia has no real competition (for now). Its chips are the industry standard for accelerated computing. They're the fastest and lowest-cost option, with the most developed software ecosystem.

• GPUs are complex. Nvidia has thousands of patents, specialized engineering talent, and circuit designs honed over 30 years. According to CEO Jensen Huang, the chips and supporting systems in Nvidia's latest supercomputers weigh 70 pounds and include 35,000 components. There is a steep learning curve for anyone hoping to imitate Nvidia's products. Would-be competitors must invest billions of dollars with little idea whether their designs will work.

• The chip is only part of the "total cost of operations." An Nvidia supercomputer can replace an entire legacy datacenter, where the cost of the cabling alone is greater than the cost of the Nvidia system. The ability to create savings in other areas gives Nvidia pricing power.

• Nvidia has high fixed costs but low marginal costs. It only needs to design a chip once and can then order as many copies as it can sell, which is why earnings have grown so much faster than revenue.

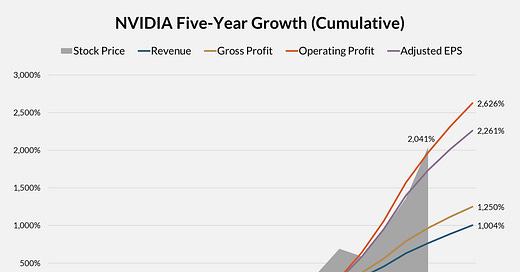
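To see why high fixed costs and low marginal costs make earnings outrun revenue, here is a rough sketch. The $27 billion base and 126% growth come from the figures above. The fixed-cost and marginal-cost numbers are invented for illustration and are not Nvidia's actual cost structure.

```python
# Illustrative only: FIXED_COSTS and MARGINAL_COST_RATIO are made-up assumptions,
# not Nvidia's reported figures.

def operating_income(revenue, fixed_costs, marginal_cost_ratio):
    """Revenue minus variable costs (a share of revenue) minus fixed costs."""
    return revenue * (1 - marginal_cost_ratio) - fixed_costs

BASE_REVENUE = 27.0          # $ billions, calendar 2022 (from the text)
GROWTH = 1.26                # 126% growth (from the text)
FIXED_COSTS = 10.0           # hypothetical design and R&D spend, $ billions
MARGINAL_COST_RATIO = 0.25   # hypothetical cost of each extra dollar of sales

before = operating_income(BASE_REVENUE, FIXED_COSTS, MARGINAL_COST_RATIO)
after = operating_income(BASE_REVENUE * (1 + GROWTH), FIXED_COSTS, MARGINAL_COST_RATIO)

income_growth = after / before - 1

print(f"Revenue growth:          {GROWTH:.0%}")
print(f"Operating income growth: {income_growth:.0%}")
```

Under these assumed costs, operating income grows roughly twice as fast as revenue, because the fixed costs do not scale with sales. A different cost split changes the size of the gap but not its direction.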

As for the stock, it's up more than 2,000% in a little over four years. Some investors hear that and immediately think "bubble," but Nvidia's earnings have grown just as quickly as the stock price. Hardly anyone saw it coming: As recently as May 2023, consensus estimates called for Nvidia to earn about $0.60/share this fiscal year (adjusting for splits). The current consensus is $2.70, or 4.5x the earlier estimate! The shares are trading for 47x this earnings figure, which seems reasonable for a company growing so absurdly fast.
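The arithmetic behind those figures can be checked in a few lines. This is only a sketch that uses the numbers quoted above. The implied share price and the multiple on the old estimate are derived values, not figures from any data source.

```python
# All inputs are taken from the paragraph above; everything else is derived.
old_eps = 0.60        # May 2023 consensus for this fiscal year, $/share
new_eps = 2.70        # current consensus, $/share
pe_multiple = 47      # price divided by current-consensus earnings
stock_gain_pct = 2000 # "more than 2,000%", so this is a lower bound

revision_multiple = new_eps / old_eps       # how far estimates moved
implied_price = pe_multiple * new_eps       # share price implied by 47x
pe_on_old_estimate = implied_price / old_eps
stock_multiple = 1 + stock_gain_pct / 100   # a 2,000% gain is 21x the start

print(f"Estimate revision:          {revision_multiple:.1f}x")
print(f"Implied share price:        ${implied_price:.2f}")
print(f"Multiple on old estimate:   {pe_on_old_estimate:.1f}x")
print(f"Stock vs. four years ago:   at least {stock_multiple:.0f}x")
```

Had estimates stayed at $0.60, the same share price would work out to more than 200x earnings. The multiple looks reasonable only because the earnings estimates rose so much.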

What could go wrong? I see two main risks for Nvidia, one short-term and the other longer-term:

Short-Term: Are We Overinvesting in Capacity?

If Nvidia is in a bubble, it's more of a fundamental bubble than a valuation bubble: the earnings multiple looks reasonable, but the recent order trends behind those earnings might not be sustainable.

Big tech companies like Meta, Alphabet, and Apple are incorporating AI features in their core products for free. That's going to make it difficult for startups to charge for AI, at least in the consumer market. Enterprises are more willing to pay for enhanced functionality, but software incumbents like Microsoft and Salesforce have a major advantage by leveraging their distribution and incorporating AI in existing workflows. If AI is primarily a cost center—rather than a driver of incremental revenue—it will be harder for startups to raise funding and established companies may start to limit their spending.

It's also possible that AI simply won't live up to the hype. ChatGPT ignited imaginations when it was first released in November 2022, but usage seems to have flatlined over the past year. AI-augmented search in Google, Facebook, and Instagram has received mixed reviews. Efforts to use AI chatbots for customer service are mostly in the experimental stage. The biggest problem with all of these efforts is "hallucinations": we can't yet trust that AI will deliver accurate information, which limits its usefulness.

An additional complication is that each successive generation of GPUs is far more efficient than the last. That's good for Nvidia in that older products rapidly become obsolete, sustaining demand for new products. But it also means demand for bigger and better AI models has to grow faster than computing efficiency improves. If not, customers may opt to do more with less.
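The race between demand and efficiency can be sketched with a toy model. The growth rates below are hypothetical, chosen only to show the two outcomes. The model tracks the number of GPUs needed, not revenue, and ignores pricing entirely.

```python
# Toy model with hypothetical growth rates; not a forecast.

def unit_demand_path(demand_growth, efficiency_growth, years):
    """Index of GPUs needed each year (year 0 = 1.0).

    GPUs needed = compute demanded / compute delivered per GPU.
    """
    path = []
    demand, efficiency = 1.0, 1.0
    for _ in range(years + 1):
        path.append(demand / efficiency)
        demand *= 1 + demand_growth
        efficiency *= 1 + efficiency_growth
    return path

EFFICIENCY_GROWTH = 1.0  # assumed: each GPU does twice the work every year

# Case 1: compute demand grows 50% a year, slower than efficiency.
slower = unit_demand_path(0.5, EFFICIENCY_GROWTH, years=3)

# Case 2: compute demand grows 150% a year, faster than efficiency.
faster = unit_demand_path(1.5, EFFICIENCY_GROWTH, years=3)

print("Demand lags efficiency: ", [round(x, 2) for x in slower])
print("Demand beats efficiency:", [round(x, 2) for x in faster])
```

In the first case the number of GPUs needed shrinks every year even though demand for computing is growing quickly. Only in the second case does unit demand keep rising.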

For now, GPUs are scarce and many companies are trying to gain a foothold in artificial intelligence. If/when the excitement dies down, budgets may not flow as freely.

Long-Term: Will Nvidia's Biggest Customers Switch to Proprietary Chips?

This is the risk that most concerns me in the long run. The five biggest tech companies besides Nvidia—Apple, Microsoft, Amazon, Meta, and Alphabet—are all working on in-house alternatives to Nvidia GPUs. These companies reportedly account for around 40% of Nvidia's sales.

I believe Nvidia has a wide moat, but I'm not convinced it can't be breached. Big tech must feel similarly, or they wouldn't be trying to build their own chips. Money is not a constraint: Nvidia spent about $21 billion on research & development over the past three years combined. Each of the five companies mentioned above spent significantly more just last year, and the five combined invested more than $225 billion in R&D!

Engineers can be hired away. Nvidia may have trouble retaining its top talent anyway, since many of them are multi-millionaires thanks to the stock's rapid ascent. Manufacturing isn't a sustainable advantage either. TSMC is Nvidia's most important supplier, but the leading foundry is eager to serve any of the big tech companies.

Probably the biggest hurdle to switching is Nvidia's software ecosystem. Yet big tech can rewrite their (internal) software as needed, and customize chips for their specific use cases, perhaps achieving better efficiency. Meta has been especially aggressive about releasing open-source AI models that can be run on multiple hardware platforms.

Google launched its first Tensor Processing Unit about a decade ago and seems to use TPUs for all its in-house AI training and inference. Apple wants to do most AI inference on-device, where it has an excellent track record designing proprietary chips, and where Nvidia's chips would be prohibitively expensive. Amazon has its Trainium and Inferentia chips as a low-cost alternative to GPUs. Meta and Microsoft were later to the chip game, but they're working on it and have every incentive to make proprietary chips work. Only time will tell whether these efforts succeed, or if Nvidia's technology, talent, and ecosystem advantages are too great to overcome.

Disclosures

Moatiful is an independent publication of Trajan Wealth, L.L.C., an SEC-registered investment advisor. The views expressed are solely those of the author, and may not reflect the views of Trajan Wealth. Nothing in this blog is intended as investment advice, nor is it an offer to buy or sell any security. Posts are for entertainment purposes only and should not be relied on when making investment decisions. Please consult your financial advisor for questions about your personal financial situation. All investments involve risk, including the potential for loss. Historical results may not be indicative of future performance. Data from third-party sources is not guaranteed to be accurate, timely, or complete. Links to external sources are provided for convenience only, and do not constitute an endorsement by Trajan Wealth. Clients and employees of Trajan Wealth may have a position in any of the securities mentioned. Data and opinions are subject to change at any time without notice.